The Rotation Group

The rotation group is a particularly powerful tool for studying three-dimensional systems, most famously atomic systems, for which the Coulomb potential is rotationally invariant (independent of the direction of the separation between charged objects).

In general, rotations acting on vectors in \(\CR^d\) are defined as real linear transformations that preserve the norm. Thus

\[ V^I \to (V')^I = R^I_J V^J , \]

where \(R\) is a \(d \times d\) matrix. Preservation of the norm means that

\[ ||{\vec V}'||^2 = {\vec V}^T R^T R\, {\vec V} = {\vec V}^T {\vec V} = ||{\vec V}||^2 . \]

This last equality holds for every vector \({\vec V}\) if and only if \(R^T R = {\bf 1}\), that is, if \(R^T = R^{-1}\). Such matrices are called orthogonal.

Successive rotations are implemented by multiplying the corresponding matrices. You can convince yourself quickly that the product of two orthogonal matrices \(R_1, R_2\) is itself orthogonal:

\[ (R_1 R_2)^T (R_1 R_2) = R_2^T R_1^T R_1 R_2 = R_2^T R_2 = {\bf 1} . \]

The corresponding group of rotations is thus the group of orthogonal matrices under matrix multiplication, which is known as \(O(d)\) or the “orthogonal group”.

Note that since

\[ {\rm det}(R^T R) = ({\rm det} R)^2 = {\rm det}\, {\bf 1} = 1 , \]

\({\rm det} R = \pm 1\). Matrices with opposite determinants are not continuously deformable into each other, and only matrices with positive determinant are continuously deformable to the identity matrix. The group of \(d\times d\) orthogonal matrices with unit determinant is called \(SO(d)\) or the “special orthogonal group”. It is this group which is usually taken to be the rotation group.
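As a quick numerical illustration of closure and of the two determinant classes, here is a minimal sketch using NumPy in \(d = 2\). The helper names `rotation_2d` and `is_orthogonal` and the specific angles are choices made for this example, not part of the notes.

```python
import numpy as np

def rotation_2d(theta):
    """2x2 counterclockwise rotation by angle theta (determinant +1)."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s], [s, c]])

def is_orthogonal(M, tol=1e-12):
    """True if M^T M equals the identity to within tol."""
    return np.allclose(M.T @ M, np.eye(M.shape[0]), atol=tol)

# A reflection is orthogonal but has determinant -1.
reflection = np.array([[1.0, 0.0], [0.0, -1.0]])

R1 = rotation_2d(0.3)               # element of SO(2)
R2 = rotation_2d(1.1) @ reflection  # element of O(2) with determinant -1
product = R1 @ R2                   # successive transformations

for name, M in [("R1", R1), ("R2", R2), ("R1 R2", product)]:
    print(name, "orthogonal:", is_orthogonal(M), "det:", np.linalg.det(M))
```

All three matrices pass the orthogonality check, and the determinants multiply as expected: the product of an element with determinant \(+1\) and one with determinant \(-1\) has determinant \(-1\), so it lies outside \(SO(2)\).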

Rotations in three dimensions

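In this case,

\[ \Pi = \begin{pmatrix} -1 & 0 & 0 \\ 0 & -1 & 0 \\ 0 & 0 & -1 \end{pmatrix} = -{\bf 1} \]

is the parity matrix, and any member of \(O(3)\) with determinant \(-1\) can be written as a member of \(SO(3)\) times \(\Pi\). Thus we will focus on \(SO(3)\).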

Finite rotations

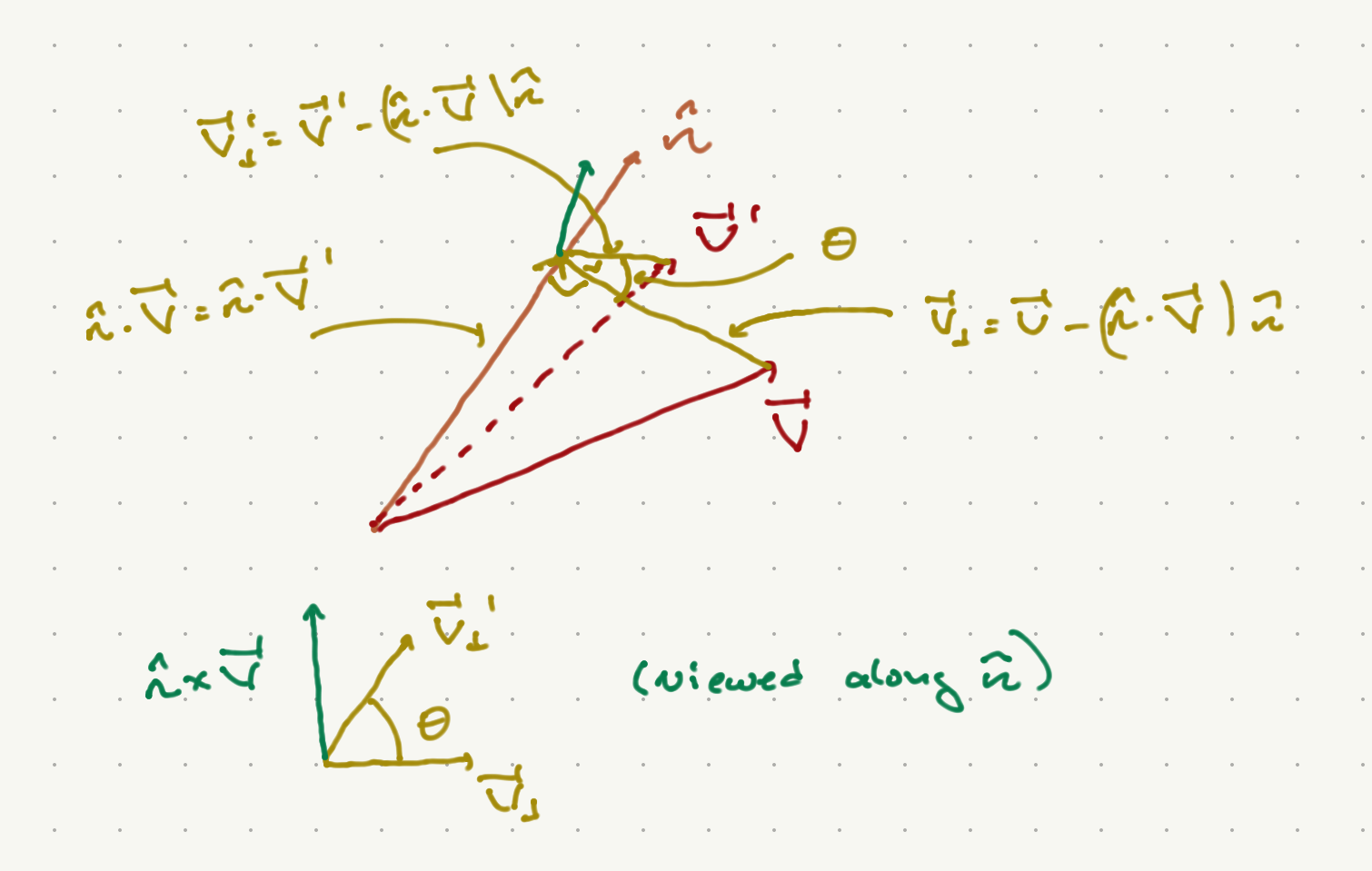

We can describe the general matrix more explicitly as follows. I claim any rotation can be described as the rotation of a vector about some axis by some angle \(\theta\). We will specify the axis by the unit vector \({\hat n}\).

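As shown in the figure above, we can break up the vector \({\vec V} = {\vec V}_{\|} + {\vec V}_{\perp}\), where parallel and perpendicular are with respect to \({\hat n}\):

\[ {\vec V}_{\|} = ({\hat n}\cdot{\vec V})\,{\hat n} , \qquad {\vec V}_{\perp} = {\vec V} - ({\hat n}\cdot{\vec V})\,{\hat n} . \]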

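We will express \({\vec V}'\) in terms of its projection onto three orthonormal vectors: \({\hat n}\), \({\hat V}_{\perp} = \frac{{\vec V}_{\perp}}{||{\vec V}_{\perp}||}\), and a third vector orthogonal to both of them. Since \({\hat n}\times{\vec V}_{\|} = 0\), \({\hat n}\times{\vec V} = {\hat n}\times {\vec V}_{\perp}\) is orthogonal to both \({\hat n}\) and \({\hat V}_{\perp}\), and \({\hat n}\times{\hat V}_{\perp}\) has unit norm. Thus, we can write

\[ {\vec V}' = ({\hat n}\cdot{\vec V}')\,{\hat n} + ({\hat V}_{\perp}\cdot{\vec V}')\,{\hat V}_{\perp} + \left[({\hat n}\times{\hat V}_{\perp})\cdot{\vec V}'\right] {\hat n}\times{\hat V}_{\perp} . \]

Now the rotation leaves the component along the axis unchanged:

\[ {\hat n}\cdot{\vec V}' = {\hat n}\cdot{\vec V} . \]

Furthermore, we can see from the figure that

\[ {\hat V}_{\perp}\cdot{\vec V}' = ||{\vec V}_{\perp}|| \cos\theta , \qquad ({\hat n}\times{\hat V}_{\perp})\cdot{\vec V}' = ||{\vec V}_{\perp}|| \sin\theta . \]

Putting all of this together, we have

\[ {\vec V}' = ({\hat n}\cdot{\vec V})\,{\hat n} + \cos\theta \left[{\vec V} - ({\hat n}\cdot{\vec V})\,{\hat n}\right] + \sin\theta\, {\hat n}\times{\vec V} . \]

Writing this as \((V')^I = R^I_J V^J\),

\[ R^I_J = \cos\theta\, \delta^I_J + (1 - \cos\theta)\, n^I n_J - \sin\theta\, \epsilon^I{}_{JK} n^K . \]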

Here \(\epsilon_{IJK}\) is the totally antisymmetric tensor defined in the appendices. Note that I have raised and lowered various indices. In the present case, this operation does not change the numerical value of any of the vectors or tensors. I leave it as an exercise to show that this is an orthogonal matrix.

You can see that there is a 3-parameter family of such matrices, labeled by \(\theta\) and by the unit vector \({\hat n}\). As it happens, the demand of orthogonality leaves only 3 independent parameters specifying a \(3\times 3\) orthogonal matrix, and in fact all members of \(SO(3)\) can be written in this way.
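The orthogonality exercise can also be checked numerically. The sketch below assumes the standard axis-angle form \({\vec V}' = \cos\theta\, {\vec V} + (1-\cos\theta)({\hat n}\cdot{\vec V}){\hat n} + \sin\theta\, {\hat n}\times{\vec V}\), with the counterclockwise sign convention; the function name `rotation_matrix` and the test axis and angle are illustrative choices.

```python
import numpy as np

def rotation_matrix(n, theta):
    """Rotation by angle theta about the axis n (normalized internally).

    Assumed form: R = cos(theta) 1 + (1 - cos(theta)) n n^T + sin(theta) [n]_x,
    where [n]_x is the matrix satisfying [n]_x @ v == np.cross(n, v).
    """
    n = np.asarray(n, dtype=float)
    n = n / np.linalg.norm(n)
    cross = np.array([[0.0, -n[2], n[1]],
                      [n[2], 0.0, -n[0]],
                      [-n[1], n[0], 0.0]])
    return (np.cos(theta) * np.eye(3)
            + (1.0 - np.cos(theta)) * np.outer(n, n)
            + np.sin(theta) * cross)

axis = np.array([1.0, 2.0, 2.0]) / 3.0   # a unit vector
R = rotation_matrix(axis, 0.7)

print("R^T R = 1:", np.allclose(R.T @ R, np.eye(3)))
print("det R:", np.linalg.det(R))
print("axis is fixed:", np.allclose(R @ axis, axis))

# A quarter turn about z should take x-hat to y-hat in this convention.
Rz = rotation_matrix([0.0, 0.0, 1.0], np.pi / 2)
print("Rz x-hat:", Rz @ np.array([1.0, 0.0, 0.0]))
```

The three parameters are visible in the inputs: two for the direction of the unit vector and one for the angle.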

Infinitesimal transformations

For infinitesimal rotations, \(\cos \theta = 1 - \half \theta^2 + \cO(\theta^4)\), \(\sin \theta = \theta + \cO(\theta^3)\). Putting this into the finite-rotation formula, Equation (313), and keeping terms only up to \(\cO(\theta)\), we have

\[ R^I_J = \delta^I_J - \theta\, \epsilon^I{}_{JK} n^K + \cO(\theta^2) . \]

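Since this is linear in \({\hat n}\) and we can write a general vector \({\hat n}\) as a linear combination of \({\hat x}, {\hat y}, {\hat z}\), we can generate general infinitesimal transformations from rotations about each of these axes. Now writing \(R(n) = 1 - i \theta J_I n^I/\hbar\), we have

\[ (J_I)_{JK} = -i\hbar\, \epsilon_{IJK} , \]

or explicitly

\[ J_x = \hbar \begin{pmatrix} 0 & 0 & 0 \\ 0 & 0 & -i \\ 0 & i & 0 \end{pmatrix} , \quad J_y = \hbar \begin{pmatrix} 0 & 0 & i \\ 0 & 0 & 0 \\ -i & 0 & 0 \end{pmatrix} , \quad J_z = \hbar \begin{pmatrix} 0 & -i & 0 \\ i & 0 & 0 \\ 0 & 0 & 0 \end{pmatrix} . \]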

We will see later why we use this normalization by \(\hbar\). Finally, recall that the group structure can be built from the commutation relations of the individual matrices. For these, you can show via brute force that

\[ [J_I, J_J] = i\hbar\, \epsilon_{IJK} J_K . \]
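The brute-force check is easy to hand to a computer. This sketch assumes the generators have matrix elements \((J_I)_{JK} = -i\hbar\,\epsilon_{IJK}\), works in units with \(\hbar = 1\), and verifies \([J_I, J_J] = i\hbar\,\epsilon_{IJK} J_K\) for all nine index pairs. The array names `eps` and `J` are choices made for this example.

```python
import numpy as np

hbar = 1.0  # units with hbar = 1 (an assumption made for convenience)

# Totally antisymmetric tensor eps[I, J, K] with eps[0, 1, 2] = +1.
eps = np.zeros((3, 3, 3))
for i, j, k in [(0, 1, 2), (1, 2, 0), (2, 0, 1)]:
    eps[i, j, k] = 1.0    # even permutations
    eps[j, i, k] = -1.0   # odd permutations

# J[I] is the 3x3 matrix with entries (J_I)_{JK} = -i hbar eps_{IJK}.
J = -1j * hbar * eps

def commutator(A, B):
    return A @ B - B @ A

max_error = 0.0
for a in range(3):
    for b in range(3):
        lhs = commutator(J[a], J[b])
        # i hbar * sum over K of eps_{abK} J_K
        rhs = 1j * hbar * np.einsum("k,kmn->mn", eps[a, b], J)
        max_error = max(max_error, np.abs(lhs - rhs).max())
print("largest violation of the commutation relations:", max_error)

# First-order rotation about z: 1 - i theta J_z / hbar is a real matrix.
theta = 1e-3
small_rotation = (np.eye(3) - 1j * theta * J[2] / hbar).real
print(small_rotation)
```

Each \(J_I\) is Hermitian, and the combination \(-i J_I/\hbar\) is a real antisymmetric matrix, which is why the infinitesimal rotation comes out real.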

Given all of this, we can build up any finite rotation from these matrices by a succession of infinitesimal rotations. That is, consider a rotation about \({\hat n}\) by an angle \(\theta\). We can achieve this with \(N\) successive rotations about the same axis, each with angle \(\theta/N\). As \(N \to \infty\), each rotation is well approximated by

\[ R({\hat n}, \theta/N) \simeq 1 - \frac{i\theta}{N\hbar}\, {\hat n}\cdot{\vec J} . \]

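Now

\[ \lim_{N\to\infty} \left(1 + \frac{x}{N}\right)^N = e^x , \]

and the same limit holds when \(x\) is replaced by a matrix. Thus, we can write

\[ R({\hat n}, \theta) = \lim_{N\to\infty} \left(1 - \frac{i\theta}{N\hbar}\, {\hat n}\cdot{\vec J}\right)^N = \exp\left(-\frac{i\theta}{\hbar}\, {\hat n}\cdot{\vec J}\right) . \]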
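The limiting procedure can be tried directly: raise the single-step matrix \(1 - i\theta\,{\hat n}\cdot{\vec J}/(N\hbar)\) to the \(N\)-th power for large \(N\) and compare with the closed-form axis-angle rotation. This is a sketch under stated assumptions: \(\hbar = 1\), generators \((J_I)_{JK} = -i\hbar\,\epsilon_{IJK}\), and the counterclockwise axis-angle formula. The function names and the choice \(N = 2^{20}\) are illustrative.

```python
import numpy as np

hbar = 1.0  # units with hbar = 1 (an assumption made for convenience)

# Generators with (J_I)_{JK} = -i hbar eps_{IJK}, written out explicitly.
Jx = hbar * np.array([[0, 0, 0], [0, 0, -1j], [0, 1j, 0]])
Jy = hbar * np.array([[0, 0, 1j], [0, 0, 0], [-1j, 0, 0]])
Jz = hbar * np.array([[0, -1j, 0], [1j, 0, 0], [0, 0, 0]])

def rotation_from_limit(n, theta, N=2**20):
    """Compose N rotations by theta/N, each kept to first order in theta/N."""
    n = np.asarray(n, dtype=float)
    n = n / np.linalg.norm(n)
    n_dot_J = n[0] * Jx + n[1] * Jy + n[2] * Jz
    step = np.eye(3) - 1j * (theta / N) * n_dot_J / hbar
    return np.linalg.matrix_power(step, N).real

def rotation_closed_form(n, theta):
    """Axis-angle rotation: cos 1 + (1 - cos) n n^T + sin [n]_x."""
    n = np.asarray(n, dtype=float)
    n = n / np.linalg.norm(n)
    cross = np.array([[0.0, -n[2], n[1]],
                      [n[2], 0.0, -n[0]],
                      [-n[1], n[0], 0.0]])
    return (np.cos(theta) * np.eye(3)
            + (1.0 - np.cos(theta)) * np.outer(n, n)
            + np.sin(theta) * cross)

axis, angle = [1.0, 2.0, 2.0], 0.7
R_limit = rotation_from_limit(axis, angle)
R_exact = rotation_closed_form(axis, angle)
print("max deviation:", np.abs(R_limit - R_exact).max())
```

The deviation shrinks roughly like \(\theta^2/N\), so increasing `N` improves the agreement until floating-point rounding takes over.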

The Group \(SU(2)\)

The group of \(n\times n\) unitary matrices is called \(U(n)\). In general, the determinants of such matrices are pure phases. The special unitary group \(SU(n)\) consists of all such matrices with determinant \(1\). Since the determinant of the product of matrices is the product of their determinants, this is a genuine subgroup of \(U(n)\).

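We wish to describe the simplest special unitary group, \(SU(2)\). All such matrices take the form

\[ U = \begin{pmatrix} a & b \\ -b^* & a^* \end{pmatrix} , \]

where \(a, b \in \CC\), \(|a|^2 + |b|^2 = 1\). We can rewrite these in terms of the Pauli matrices:

\[ U = a_r {\bf 1} + i \left( b_i \sigma_x + b_r \sigma_y + a_i \sigma_z \right) , \]

where \(a = a_r + i a_i\), \(b = b_r + i b_i\), and \(a_{i,r},b_{i,r} \in \CR\).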
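To make the parametrization concrete, the following sketch builds a matrix of the standard form \(\begin{pmatrix} a & b \\ -b^* & a^* \end{pmatrix}\) with \(|a|^2 + |b|^2 = 1\), checks that it is unitary with unit determinant, and compares it with the Pauli-matrix expansion \(a_r {\bf 1} + i(b_i\sigma_x + b_r\sigma_y + a_i\sigma_z)\). The helper `su2_from_ab` and the sample values of \(a, b\) are assumptions made for this example.

```python
import numpy as np

sigma_x = np.array([[0, 1], [1, 0]], dtype=complex)
sigma_y = np.array([[0, -1j], [1j, 0]], dtype=complex)
sigma_z = np.array([[1, 0], [0, -1]], dtype=complex)

def su2_from_ab(a, b):
    """Build [[a, b], [-b*, a*]] after rescaling so that |a|^2 + |b|^2 = 1."""
    norm = np.sqrt(abs(a) ** 2 + abs(b) ** 2)
    a, b = a / norm, b / norm
    return np.array([[a, b], [-np.conj(b), np.conj(a)]])

U = su2_from_ab(0.6 + 0.2j, -0.3 + 0.7j)
a, b = U[0, 0], U[0, 1]   # the rescaled parameters

# Expansion in the identity and the Pauli matrices.
pauli_form = a.real * np.eye(2) + 1j * (b.imag * sigma_x
                                        + b.real * sigma_y
                                        + a.imag * sigma_z)

print("unitary:", np.allclose(U.conj().T @ U, np.eye(2)))
print("det U:", np.linalg.det(U))
print("matches Pauli expansion:", np.allclose(U, pauli_form))
```

The four real numbers \(a_r, a_i, b_r, b_i\) subject to one constraint leave three independent parameters, the same count as for \(SO(3)\).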

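Now if we set \(a_i, b_r, b_i \in \cO(\eps)\) for \(\eps \ll 1\), the constraint \(|a|^2 + |b|^2 = 1\) demands that \(a_r = 1 + \cO(\eps^2)\). Thus, for infinitesimal transformations,

\[ U \simeq {\bf 1} + i \left( b_i \sigma_x + b_r \sigma_y + a_i \sigma_z \right) = {\bf 1} - i \alpha\, {\hat n}\cdot{\vec \sigma} , \]

where \(\alpha = \sqrt{|a_i|^2 + |b_r|^2 + |b_i|^2}\), and

\[ {\hat n} = -\frac{1}{\alpha} \left( b_i, b_r, a_i \right) \]

is a unit vector.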

If we define \({\vec J} = \frac{\hbar}{2} {\vec \sigma}\), we have the commutation relations

\[ [J_I, J_J] = i\hbar\, \epsilon_{IJK} J_K . \]

If we set \(\theta = 2\alpha\), then we have

\[ U \simeq {\bf 1} - \frac{i\theta}{\hbar}\, {\hat n}\cdot{\vec J} , \]

which takes the same form as the infinitesimal rotation matrices in \(SO(3)\), for which \(J_I\) have the same commutation relations. It is tempting to state that \(SO(3)\) and \(SU(2)\) are the same group. As it happens, this is not quite true; rather (as we will see), \(SU(2)\) is the double cover of \(SO(3)\). That is, if we map \(SU(2)\) to \(SO(3)\), the map is 2-to-1.
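The 2-to-1 statement can be previewed numerically. The sketch below uses two standard facts that are not derived in this section and should be read as assumptions here: the closed form \(\exp(-i\theta\,{\hat n}\cdot{\vec\sigma}/2) = \cos(\theta/2)\,{\bf 1} - i \sin(\theta/2)\,{\hat n}\cdot{\vec\sigma}\), and the map \(R_{IJ} = \half {\rm Tr}(\sigma_I U \sigma_J U^\dagger)\) from \(SU(2)\) to \(SO(3)\). The function names are illustrative.

```python
import numpy as np

# Pauli matrices stacked as sigma[0], sigma[1], sigma[2] = x, y, z.
sigma = np.array([
    [[0, 1], [1, 0]],
    [[0, -1j], [1j, 0]],
    [[1, 0], [0, -1]],
], dtype=complex)

def su2_element(n, theta):
    """exp(-i theta n.sigma / 2) = cos(theta/2) 1 - i sin(theta/2) n.sigma."""
    n = np.asarray(n, dtype=float)
    n = n / np.linalg.norm(n)
    n_dot_sigma = np.einsum("i,iab->ab", n, sigma)
    return np.cos(theta / 2) * np.eye(2) - 1j * np.sin(theta / 2) * n_dot_sigma

def to_so3(U):
    """Assumed map to SO(3): R_IJ = (1/2) Tr(sigma_I U sigma_J U^dagger)."""
    U_dag = U.conj().T
    return np.array([[0.5 * np.trace(sigma[i] @ U @ sigma[j] @ U_dag).real
                      for j in range(3)] for i in range(3)])

theta = 0.9
U = su2_element([0.0, 0.0, 1.0], theta)
R_plus, R_minus = to_so3(U), to_so3(-U)

c, s = np.cos(theta), np.sin(theta)
Rz = np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

print("U maps to the rotation about z:", np.allclose(R_plus, Rz))
print("U and -U give the same rotation:", np.allclose(R_plus, R_minus))
print("theta + 2 pi gives -U:",
      np.allclose(su2_element([0.0, 0.0, 1.0], theta + 2 * np.pi), -U))
```

Because \(U\) appears twice in the map, the overall sign drops out, so \(U\) and \(-U\) land on the same rotation. A rotation angle of \(2\pi\) returns the \(SO(3)\) matrix to itself but sends \(U\) to \(-U\).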